
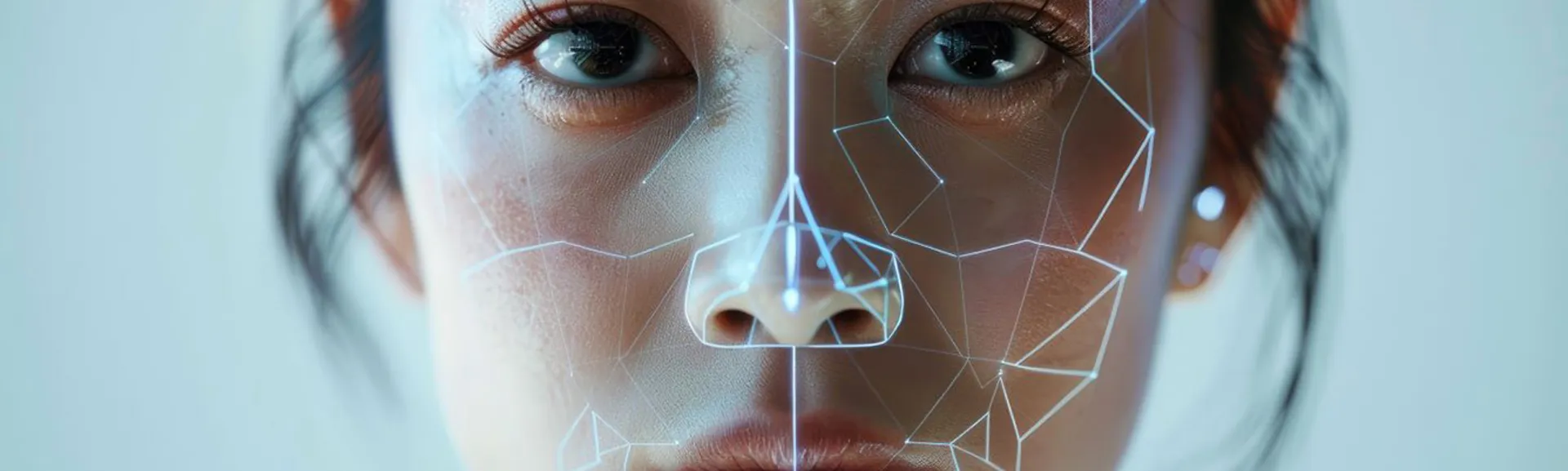

Deepfake fraud has officially reached an "industrial scale," according to a chilling new analysis by AI experts.

The report, highlighted by The Guardian, warns that tools used to create hyper-realistic, tailored scams are no longer the playground of elite hackers. Instead, they have become inexpensive, widely available, and simple enough for “pretty much anybody” to deploy against the public.

Researchers at the AI Incident Database have catalogued a surge in "impersonation for profit." Recent examples include sophisticated scams in which deepfake videos of politicians were used to hawk fake investment schemes and AI-generated "doctors" were used to promote medical scams.

The financial toll is staggering: UK consumers alone are estimated to have lost £9.4bn to fraud in the nine months leading up to November 2025.

MIT researcher Simon Mylius claims that the barriers to entry for producing deepfakes have effectively disappeared. “Capabilities have suddenly reached that level where fake content can be produced by anyone,” he warned. Meanwhile, Harvard experts suggest that AI models are evolving far faster than security experts anticipated, making detection a constant game of cat-and-mouse.

One recent high-profile incident involved the CEO of AI security firm Evoke, who nearly hired a "talented engineer" following a video interview. Only after the CEO noticed the candidate's "soft edges" and a glitchy, fake background did a technical analysis confirm that the individual was a deepfake.

The motive remains unclear (it may have been a play for a salary or a grab for trade secrets), but the incident serves as a warning that no business is too small to be targeted.

In response to this growing national security threat, the UK Government recently announced a “world-first” deepfake detection initiative. As detailed on Tech Digest, the Home Office is partnering with Microsoft, academics, and technical experts to build a standardized evaluation framework.

This collaboration aims to establish consistent industry standards for identifying manipulated audio and video, bridging the gap between theoretical AI models and the real-world tools needed by law enforcement.

With an estimated eight million deepfakes shared in 2025, a sixteenfold leap from just 500,000 two years earlier, the new framework with Microsoft is designed to identify gaps in current detection tools before the next wave of AI-driven fraud hits the mainstream.
